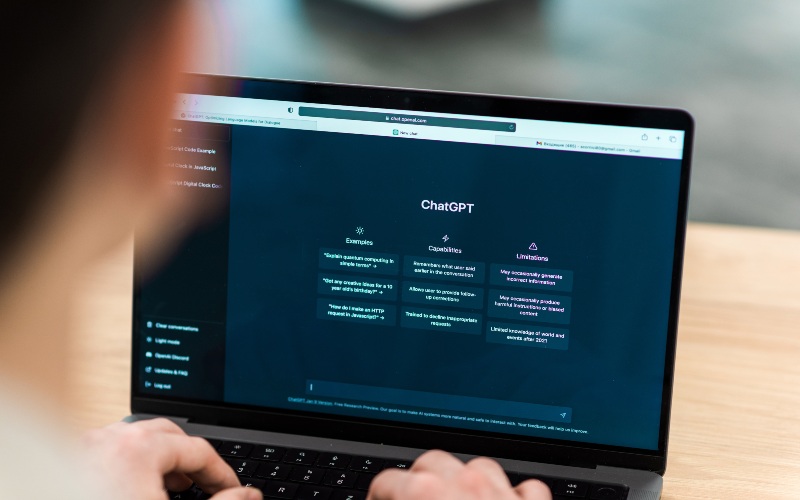

OpenAI denies ban on legal and medical topics in ChatGPT

OpenAI officials have clarified that no change has been made to ChatGPT’s core capabilities or policies.

OpenAI has moved to quash a wave of online speculation claiming that its flagship AI platform, ChatGPT, has been “banned” from giving legal and medical advice.

The company confirmed Monday that it has introduced no new policy prohibiting such responses and has only reaffirmed its long-standing safety guidelines.

The confusion began after several social media posts and tech blogs circulated screenshots alleging that ChatGPT had stopped responding to questions about medical conditions and legal issues.

The posts quickly went viral, sparking fears that OpenAI had imposed a blanket ban on professional topics.

However, OpenAI officials have since clarified that no change has been made to ChatGPT’s core capabilities or policies.

The AI model continues to provide general educational information about law, health, and finance, but not personalised professional advice of the kind that requires a licence or certified expertise.

“Nothing has changed. ChatGPT still provides general information in these areas, as it always has,” an OpenAI spokesperson said in a statement. “Our policy remains the same: users should not treat AI outputs as a replacement for professional advice.”

The misunderstanding appears to stem from OpenAI’s October 29 update to its usage policy page, which reorganised sections dealing with “high-risk applications” such as health, finance, and legal assistance.

While the update used firmer language around responsible use, warning users not to rely on ChatGPT for decisions that could cause “serious harm”, it did not impose new restrictions on general discussion of those topics.

Industry analysts say the rumour spread because of how the new wording was interpreted.

“The phrasing about ‘not providing advice’ was taken literally,” said AI governance researcher Dr Lisa Okafor. “But in policy terms, OpenAI was restating an existing principle, not creating a new rule.”
