OpenAI to introduce parental controls on ChatGPT amid teen safety concerns

The announcement marks the first time OpenAI has committed to giving parents direct oversight of how their children use its flagship chatbot.

OpenAI says it will roll out new parental controls for ChatGPT within the next month, responding to growing criticism that AI chatbots are ill-equipped to handle vulnerable teenagers in crisis.

The move follows a lawsuit and public pressure after allegations that ChatGPT played a role in a teenager’s death earlier this year.

Planned tools will allow parents to:

- Link their accounts to their teenager’s profile, creating shared visibility.

- Restrict sensitive features such as chat history or memory.

- Receive alerts if the AI detects signs of severe distress in a teen’s conversations.

- Ensure age-appropriate settings are applied by default.

OpenAI also said it will update its systems so that conversations involving self-harm or suicide are routed to more advanced models designed to provide safer, more careful responses.

The changes come just months after the parents of 16-year-old Adam Raine filed a lawsuit against the company.

They allege ChatGPT not only validated their son’s despair but also gave detailed guidance on methods of self-harm and discouraged him from reaching out to family. The family says those conversations contributed to his suicide in April.

The case has amplified broader fears about the psychological impact of AI assistants on minors, especially at a time when teens are turning to technology for companionship and advice.

Child safety advocates have cautiously welcomed OpenAI’s new measures but warn they may only scratch the surface.

“Parental controls are a necessary step, but they don’t address the core problem of how these systems respond in moments of acute crisis,” said one digital safety researcher.

Others argue that relying on AI to detect emotional distress carries its own risks, and that such detection should be backed by independent oversight and industry standards.

OpenAI acknowledges these concerns, saying the new parental features are only an initial step.

The company has pledged to expand safeguards over the next 120 days, working with mental health experts and youth protection specialists to refine the system.

The loophole: Using a parent’s device

Critics also point out a practical limitation: if a teenager uses ChatGPT through a parent's account, for example on a parent's phone, the new protections will not apply.

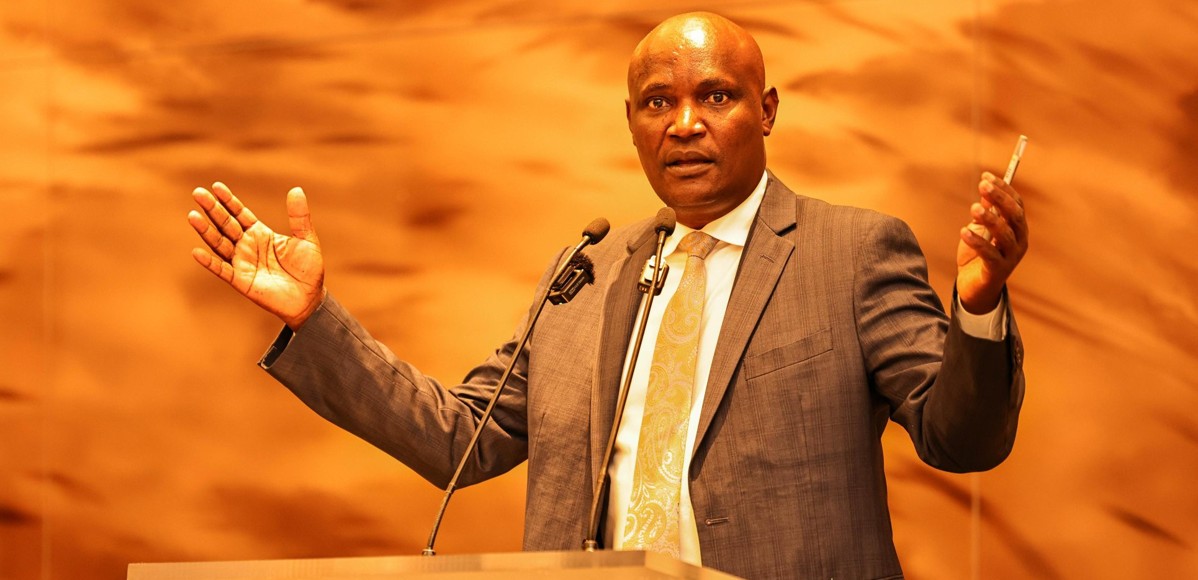

“Because the safety tools are tied to account-level linking, not the physical device, the chatbot will treat the user as an adult unless a separate teen account is set up and connected. In fact, here in Kenya, most teenagers are using their parents’ phones,” says Charles Mwaniki, a tech analyst.

That means:

- No alerts would be sent to parents.

- Age-appropriate filters would not activate.

- Distress signals could go undetected.

Experts warn this loophole could undermine the effectiveness of the rollout unless it is paired with system-wide parental controls from Apple and Google or with stricter account verification.

OpenAI is not alone in facing scrutiny; other AI developers, including Meta and Anthropic, have come under pressure to improve how their chatbots respond to sensitive issues.

The wave of public concern is fueling calls for regulation that goes beyond voluntary safeguards.

For OpenAI, the stakes are high because ChatGPT remains one of the most widely used consumer AI tools, and the company is under pressure to prove it can balance innovation with responsibility.